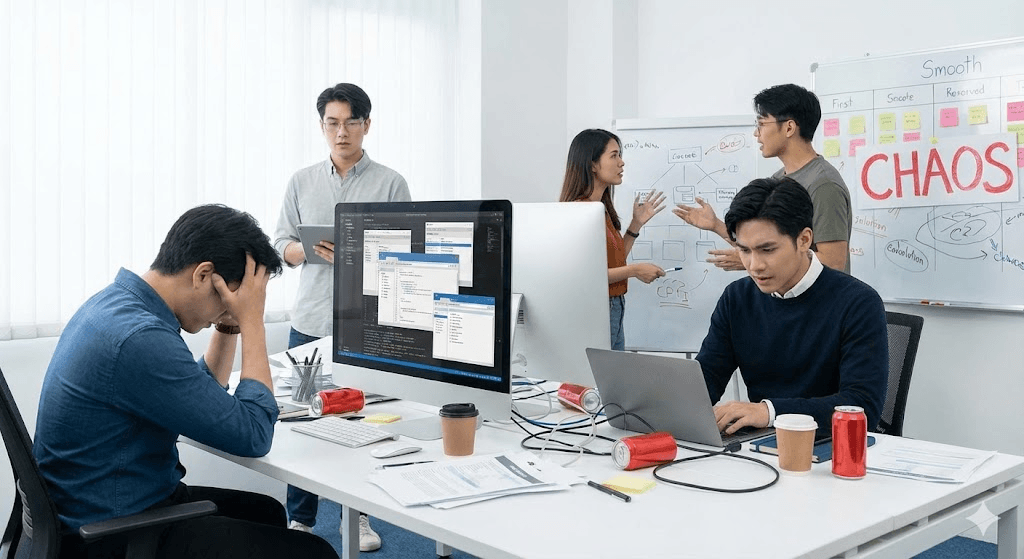

Smooth: Disciplined Velocity

This is where speed emerges from clarity, not chaos. Systems are built with explicit contracts, robust error handling, and predictable behavior. AI tools amplify productivity within guardrails, enabling the team to move fast without breaking down.

- Clear domain models & schemas
- Explicit error channels & resource management
- AI-assisted dev with validation loops
- Observable systems for proactive debugging
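
As a rough illustration of the first two points, here is a minimal Python sketch. It is not taken from any particular codebase: `Order`, `Ok`, `Err`, `parse_order`, `audit_log`, and `handle` are all hypothetical names chosen for the example. It assumes a typed domain model as the contract, failures returned as values rather than raised, and a context manager that guarantees cleanup.

```python
from contextlib import contextmanager
from dataclasses import dataclass
from typing import Iterator, Union


@dataclass(frozen=True)
class Order:
    """Hypothetical domain model: the fields and types are the contract."""
    order_id: str
    quantity: int


@dataclass(frozen=True)
class Ok:
    value: Order


@dataclass(frozen=True)
class Err:
    reason: str


# Explicit error channel: callers must handle both variants.
Result = Union[Ok, Err]


def parse_order(raw: dict) -> Result:
    """Validate untrusted input; failures are returned as values, not raised."""
    order_id = raw.get("order_id")
    quantity = raw.get("quantity")
    if not isinstance(order_id, str) or not order_id:
        return Err("order_id must be a non-empty string")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(quantity, bool) or not isinstance(quantity, int) or quantity <= 0:
        return Err("quantity must be a positive integer")
    return Ok(Order(order_id, quantity))


@contextmanager
def audit_log(events: list) -> Iterator[list]:
    """Stand-in for a managed resource: "close" is recorded even on failure."""
    events.append("open")
    try:
        yield events
    finally:
        events.append("close")


def handle(raw: dict, events: list) -> Result:
    """Parse one request while holding the (simulated) resource."""
    with audit_log(events) as log:
        result = parse_order(raw)
        log.append("ok" if isinstance(result, Ok) else f"rejected: {result.reason}")
        return result
```

Calling `handle({"order_id": "A1", "quantity": 0}, events)` returns an `Err` instead of raising, and `events` still ends with `"close"`. The same validation step is one place where AI-generated input or code could be checked before it is trusted.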